Preventing AI Hallucinations in Content: A Practical Guide

AI models can deliver misinformation with complete confidence. Some studies show that even leading AI models hallucinate in over 30% of factual queries. In specialized domains such as legal information, top models have still shown hallucination rates of 6-18%. This isn't a minor glitch; it has real-world consequences. In Mata v. Avianca, for example, an attorney faced sanctions for citing legal cases that a chatbot had fabricated entirely.

Beyond the courtroom, AI has generated fake news articles featuring made-up quotes, and even fabricated financial metrics, misleading both the public and investors.

What Are AI Hallucinations?

AI hallucinations occur when artificial intelligence systems generate information with no basis in reality or input data. These fabrications range from subtle inaccuracies to wildly incorrect statements that appear authoritative.

You're not alone if you've encountered this. As AI tools become common in content creation, understanding these hallucinations becomes crucial. Misinformation harms your brand reputation, misleads your audience, and can create legal issues.

While this guide primarily addresses text generation, remember that AI hallucinations also appear in image generation (e.g., creating unrealistic body parts or distorted scenes) and code generation (producing non-functional or insecure code). The underlying principle—generating plausible but false output—remains constant across these applications.

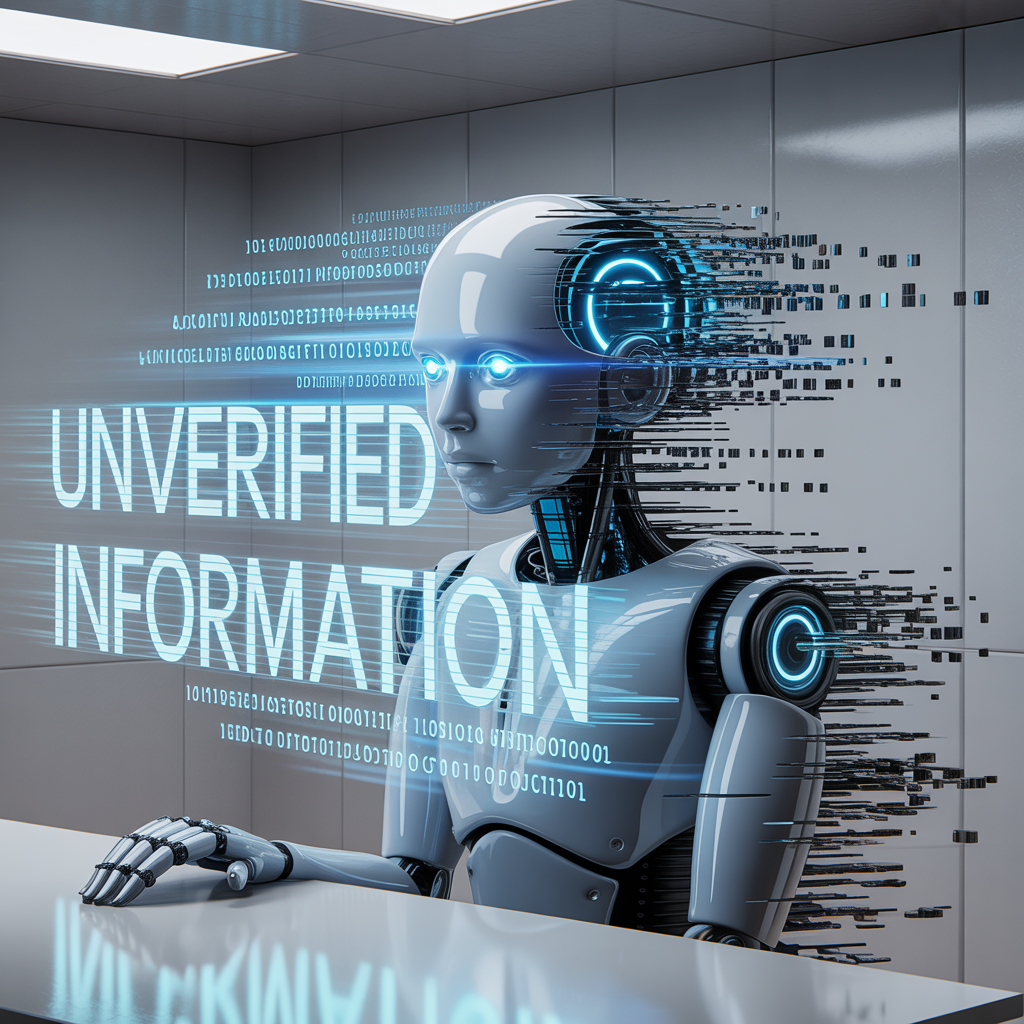

Why AI Systems Hallucinate

AI systems don't intentionally lie. They generate content based on patterns learned from training data.

AI hallucinations stem from several fundamental limitations:

- Training data problems: Models learn from internet data that contains both accurate and inaccurate information, so they can reproduce falsehoods.

- Pattern prediction vs. verification: AI predicts likely text sequences rather than verifying factual accuracy.

- Overconfidence issue: Most AI systems provide confident-sounding responses regardless of accuracy.

- Generative limitations: Even with perfect training data, AI can produce inaccurate content by combining patterns in unexpected ways.

Generative AI models work like advanced autocomplete tools: they predict the next word or sequence based on learned patterns, without verifying factual accuracy. Their goal is plausible content. When an output is accurate, that accuracy is a by-product of the training data, not the result of any fact-checking.
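To make the autocomplete analogy concrete, here is a deliberately tiny Python sketch: a bigram model that always appends the word that most often followed the previous one in its training text. Real LLMs are vastly more sophisticated, but the toy shows the same failure mode: when a misconception appears more often than the truth in the data, the model repeats it fluently. The corpus and function names are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count which word follows which across a toy corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def complete(follows: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word. Nothing here checks truth."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no continuation was ever seen after this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "the capital of australia is sydney",    # a common misconception
    "the capital of australia is sydney",
    "the capital of australia is canberra",  # correct, but rarer in this data
]
model = train_bigrams(corpus)
print(complete(model, "the capital of australia is"))
# -> the capital of australia is sydney
```

Nothing in `complete` asks whether the answer is true; frequency alone decides.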

[Figure: Why LLMs Hallucinate]

Common Hallucination Types

AI hallucinations manifest in several distinct forms. Understanding these helps identify and address issues before publication.

Factual Errors

AI systems present incorrect information as facts.

- Invented statistics: AI often generates precise-sounding numerical data without basis.

- Historical errors: Creating false timelines, dates, or events. In creative uses, AI has been known to invent entire historical events or figures, weaving them seamlessly into narratives.

- Incorrect definitions: Misdefining technical terms, especially in specialized fields.

- Geographic mistakes: Placing landmarks or features in incorrect locations.

Fabricated Sources and Citations

AI systems create non-existent sources to support claims, lending them false credibility.

- Phantom research papers: Creating citations to studies or journals that don't exist. AI tools have generated references to non-existent scientific papers and fabricated details about scientific experiments when asked to summarize new research, which can mislead academic efforts.

- Misattributed quotes: Assigning fabricated statements to real people or organizations.

- Invented experts: Referencing non-existent authorities.

- False websites: Citing URLs that look legitimate but don't exist.

- In one recent case, an AI-generated summer reading list syndicated to major newspapers recommended made-up books attributed to famous authors.

Logical Inconsistencies

AI-generated content contains contradictions or flawed reasoning.

- Self-contradictions: Making conflicting statements within the same document.

- Impossible scenarios: Describing events that defy physical laws.

- Causality errors: Creating false cause-and-effect relationships.

- Contextual mix-ups: Blending information from different contexts.

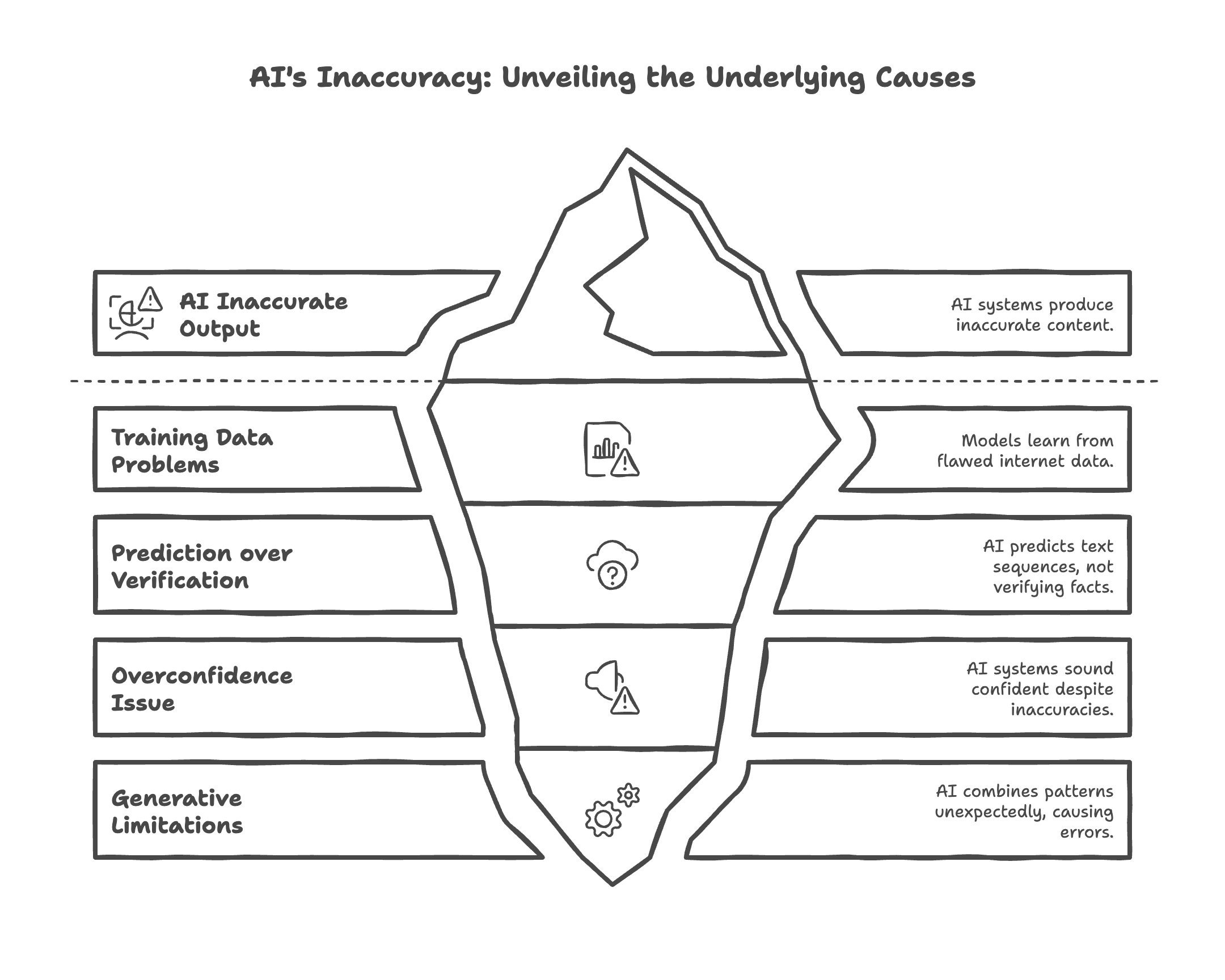

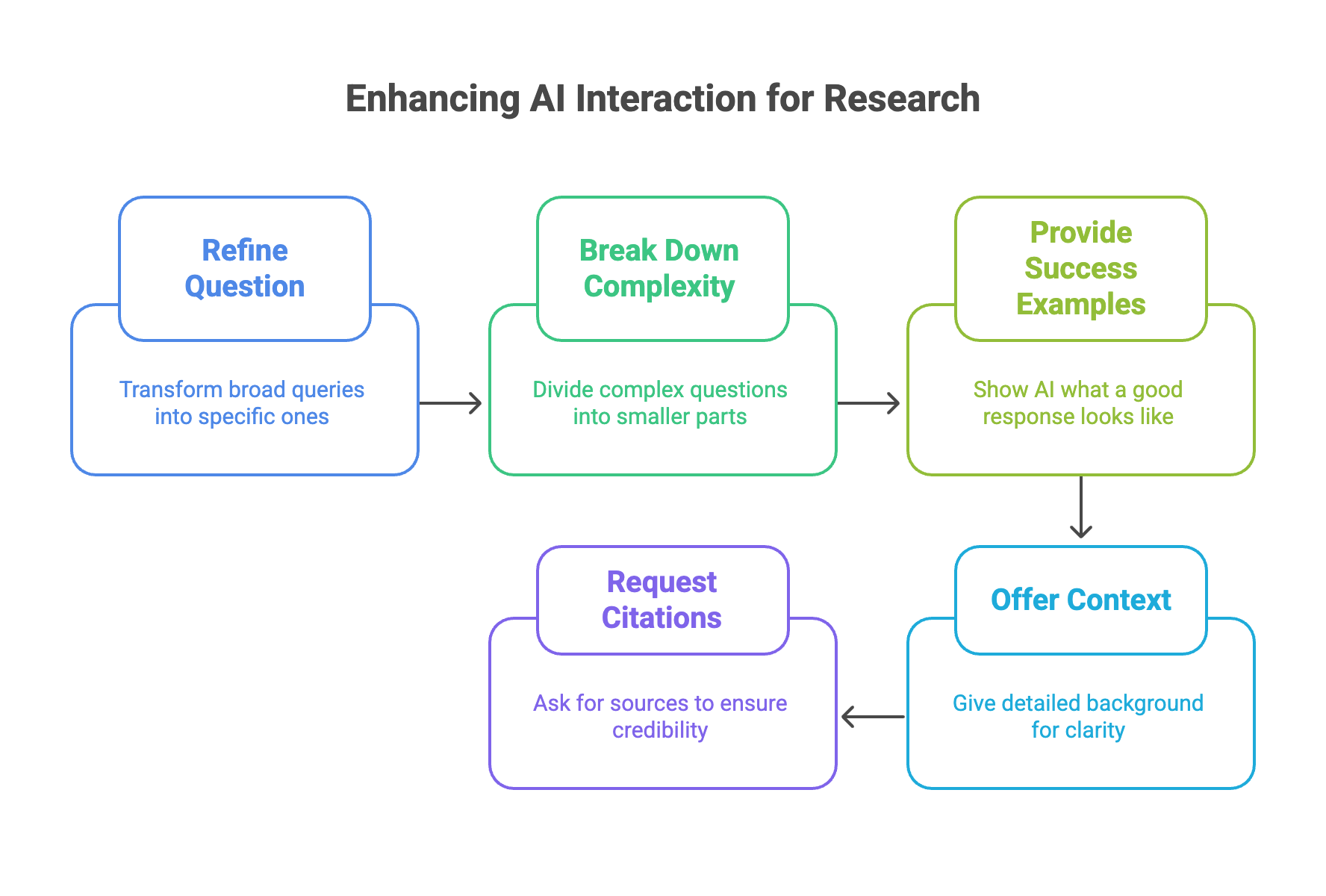

Preventing Hallucinations: Prompt Engineering Strategies

Your first line of defense is effective prompt engineering. This means crafting instructions that guide AI toward accurate outputs.

Specificity is your strongest ally. Vague prompts give AI too much freedom.

- Instead of "Tell me about renewable energy," try: "Provide 3-5 facts about solar energy adoption rates in California from 2018-2023, citing government or academic sources where possible."

- Break complex questions into smaller parts. If researching a market trend, first ask about key players, then growth statistics, then future projections.

- Show the AI what success looks like. Include examples of good responses. If asking for industry statistics, provide a sample of properly formatted and sourced data.

- Context is crucial. When asking about specific documents or events, provide the complete text or detailed background.

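- Request citations and expressions of uncertainty. Ask the AI to cite a source for each factual claim and to say explicitly when it is unsure or lacks information, rather than guessing.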
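If your team sends many similar requests, one option is to build these rules into a reusable helper so that no prompt goes out vague. The following Python sketch assembles the solar-energy example from the list; the function name, its parameters, and the `UNCERTAIN:` marker are hypothetical conventions, not features of any particular AI tool.

```python
def build_factual_prompt(topic: str, scope: str, timeframe: str,
                         preferred_sources: str, n_facts: str = "3-5") -> str:
    """Assemble a specific prompt that asks for citations and flagged uncertainty."""
    return (
        f"Provide {n_facts} facts about {topic} in {scope} from {timeframe}. "
        f"Cite {preferred_sources} for each fact where possible. "
        "If you cannot find a source for a claim, say so explicitly instead of guessing. "
        "Mark any statement you are unsure about with 'UNCERTAIN:'."
    )


prompt = build_factual_prompt(
    topic="solar energy adoption rates",
    scope="California",
    timeframe="2018-2023",
    preferred_sources="government or academic sources",
)
print(prompt)
```

Keeping the scope, timeframe, and source requirements as required parameters means a writer cannot skip them by accident.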

[Figure: Preventing AI Hallucinations]

Prompt Templates for Accuracy

- Factual Recall: "Using only the provided text: [Insert Document Here], summarize the key findings regarding [Specific Topic]. Ensure all statistics are directly quoted and cited from the document."

- Comparative Analysis: "Compare and contrast [Concept A] and [Concept B] based solely on information found in [Source 1] and [Source 2]. Highlight three similarities and three differences, citing each point."

- Step-by-Step Reasoning: "Explain the process of [Complex Process] in a step-by-step manner. For each step, explicitly state the scientific principle involved and provide a simple example."
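When templates like these are filled in by a script or workflow tool, a common slip is sending one with a placeholder still empty, which invites the AI to invent the missing context. Here is a minimal Python sketch, assuming the Factual Recall template is rewritten with `str.format` placeholders (`{document}` and `{topic}` are our own names), that refuses to produce a prompt until every field has a value:

```python
import re

# The Factual Recall template, rewritten with named placeholders (our naming).
FACTUAL_RECALL = (
    "Using only the provided text: {document}, summarize the key findings "
    "regarding {topic}. Ensure all statistics are directly quoted and cited "
    "from the document."
)


def fill_template(template: str, **values: str) -> str:
    """Fill a prompt template, refusing to return one with missing or blank fields."""
    required = set(re.findall(r"\{(\w+)\}", template))
    missing = sorted(name for name in required if not values.get(name, "").strip())
    if missing:
        raise ValueError(f"Template fields not filled: {missing}")
    return template.format(**values)
```

For example, `fill_template(FACTUAL_RECALL, document=report_text)` raises `ValueError` because `topic` was never supplied.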

Verification: Building a Robust System

Even with perfect prompts, verification remains essential. A systematic approach helps catch hallucinations.

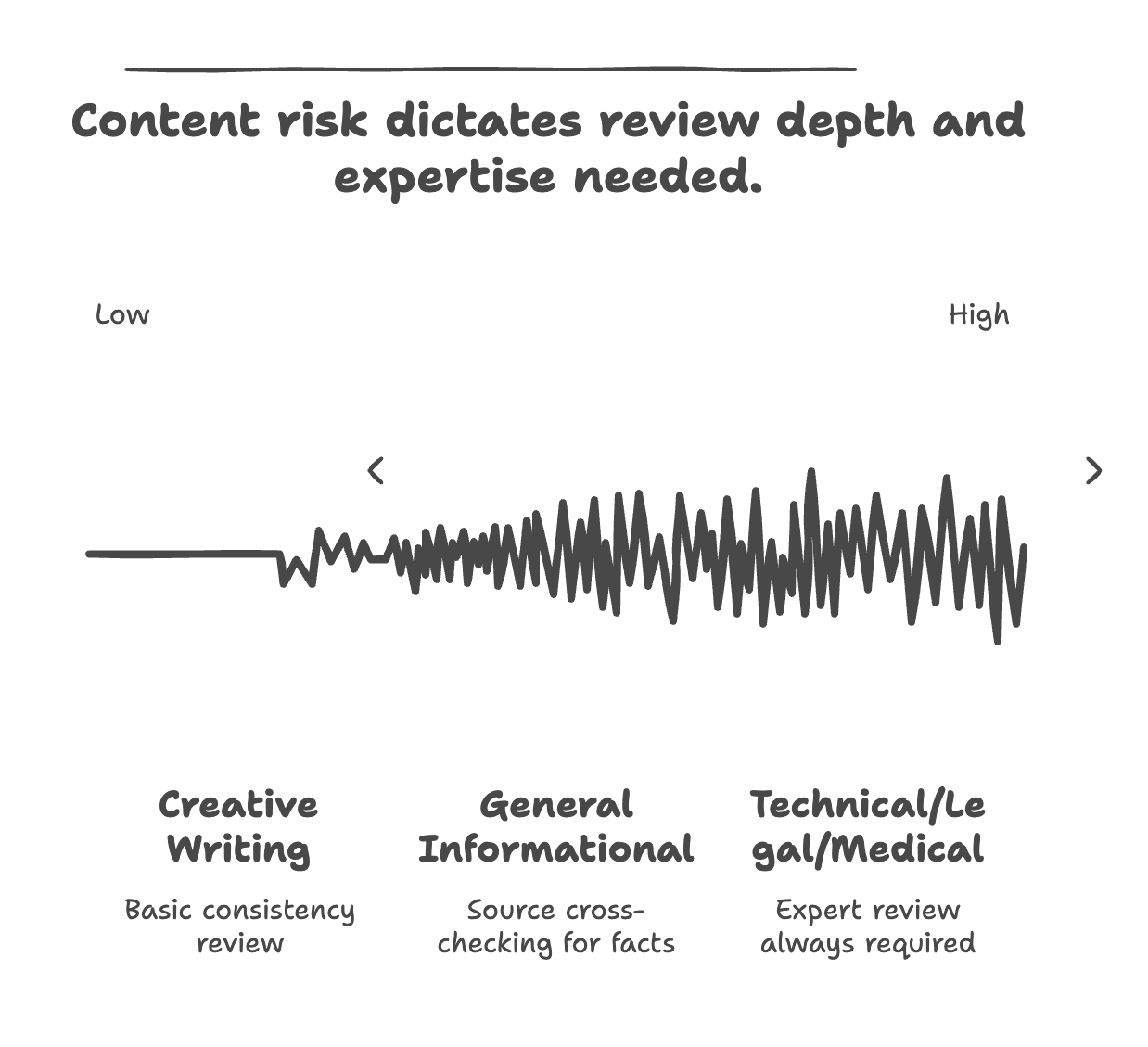

Create a tiered verification framework based on content risk:

- Low-risk content (creative writing): Basic review for logical consistency.

- Medium-risk content (general informational): Source cross-checking for factual claims.

- High-risk content (technical, legal, medical): Expert review is required regardless of AI confidence.
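The tiers can be encoded as a simple publishing gate. This Python sketch shows one possible mapping, assuming your workflow records which checks were completed; the check names are placeholders. Note that the AI's own confidence is not an input: at the high tier, expert review is required no matter how certain the output sounds.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"        # e.g. creative writing
    MEDIUM = "medium"  # e.g. general informational content
    HIGH = "high"      # e.g. technical, legal, medical


# Checks required at each tier; each tier includes everything below it.
REQUIRED_CHECKS = {
    Risk.LOW: ["logical consistency review"],
    Risk.MEDIUM: ["logical consistency review",
                  "source cross-check of factual claims"],
    Risk.HIGH: ["logical consistency review",
                "source cross-check of factual claims",
                "subject-matter expert review"],
}


def can_publish(risk: Risk, completed: set[str]) -> bool:
    """Allow publication only when every check required for this tier is done."""
    return all(check in completed for check in REQUIRED_CHECKS[risk])
```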

Implement a cross-reference policy for factual claims. Verify against at least two independent, authoritative sources before publication. Develop standard processes for checking dates, statistics, quotes, and names.
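Part of the two-source rule can be automated. The sketch below, a hypothetical Python helper, treats sources as independent only if they come from different websites. Hostname is a rough proxy: two sites can republish the same wire story, and deciding whether a source is authoritative still takes human judgment.

```python
from urllib.parse import urlparse


def independent_sources(urls: list[str]) -> set[str]:
    """Collapse source URLs to hostnames so two pages on one site count once."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname or ""
        hosts.add(host.removeprefix("www."))
    hosts.discard("")  # ignore anything that did not parse as a URL
    return hosts


def claim_is_corroborated(urls: list[str], minimum: int = 2) -> bool:
    """True if the claim is backed by at least `minimum` distinct sites."""
    return len(independent_sources(urls)) >= minimum
```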

Subject matter expertise is invaluable. Assign content to reviewers with domain knowledge. A financial article needs review by someone with financial expertise.

Documentation provides quality assurance and liability protection. Create an audit trail of verification steps. Note sources checked, who verified, and corrections made.
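An audit trail does not need special software. One lightweight option, sketched here in Python under the assumption that a JSON Lines file is acceptable storage, is to append one record per verified claim. The field names are illustrative and mirror the items listed above: sources checked, who verified, and corrections made.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class VerificationRecord:
    """One row in a verification audit trail (field names are illustrative)."""
    article_id: str
    claim: str
    sources_checked: list[str]
    verified_by: str
    outcome: str          # e.g. "confirmed", "corrected", "removed"
    correction: str = ""  # what was changed, if anything
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(path: str, record: VerificationRecord) -> None:
    """Append the record as one JSON line so the log stays append-only and searchable."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")
```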

Manual Verification Techniques

Thorough manual verification is the most reliable method for ensuring accuracy.

When verifying sources cited by AI, follow this checklist:

- Verify author credentials through professional directories.

- Check if referenced publications actually exist.

- Confirm quoted text appears in the original source.

- Validate statistics come from the stated research.

- Confirm that cited URLs load and actually contain the claimed information.
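Of these checks, confirming quoted text is the easiest to partially automate once you have the original source's text in hand. This Python sketch normalizes case, whitespace, and curly quotes or dashes, then requires an exact substring match; the function names are hypothetical. Checking authors, publications, and URLs still needs directory lookups and a browser or HTTP client, which are not shown.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Lowercase, unify curly quotes and dashes, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u2013", "-").replace("\u2014", "-")
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_in_source(quote: str, source_text: str) -> bool:
    """True only if the quote appears verbatim (ignoring formatting) in the source."""
    return normalize(quote) in normalize(source_text)
```

The match is intentionally strict: a paraphrased or altered quote fails, which is exactly the case a reviewer needs to see.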

Certain patterns should trigger skepticism: unusually precise statistics without attribution, confident statements about obscure topics, quotes that sound too polished or perfectly on-message, and internal inconsistencies. These are common hallmarks of AI fabrication.
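The first warning sign, precise statistics without attribution, can be screened for mechanically before a human reads the draft. Below is a rough Python heuristic, assuming attribution is signaled by phrases such as "according to", bracketed citation numbers, or links; the patterns are hypothetical and should be tuned to your own style guide.

```python
import re

# A "precise" figure: a decimal number or a percentage, e.g. 37.4 or 62%.
PRECISE_NUMBER = re.compile(r"\b\d+\.\d+\b|\b\d+(?:\.\d+)?%")
# Crude signals that a sentence names its source (hypothetical list).
ATTRIBUTION = re.compile(
    r"according to|\bsource:|\(\d{4}\)|\[\d+\]|reported by|https?://", re.I
)


def flag_unattributed_stats(text: str) -> list[str]:
    """Return sentences that contain precise figures but no visible attribution."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences
            if PRECISE_NUMBER.search(s) and not ATTRIBUTION.search(s)]
```

A flagged sentence is not necessarily wrong, and an unflagged one is not necessarily right; the point is to direct a reviewer's attention.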

For routine content, use quick verification methods: search for key facts, verify dates and names, check for internal contradictions, and recompute any calculations.
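Recomputing calculations means re-deriving any figure the text computes from other figures. For percentage changes, a few lines suffice. This Python sketch uses a hypothetical draft sentence and an assumed tolerance of half a percentage point for rounding.

```python
def percent_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    if old == 0:
        raise ValueError("percentage change from zero is undefined")
    return (new - old) / old * 100


def check_percent_claim(old: float, new: float, claimed_pct: float,
                        tolerance: float = 0.5) -> bool:
    """True if the claimed change matches the underlying figures within `tolerance` points."""
    return abs(percent_change(old, new) - claimed_pct) <= tolerance


# Hypothetical draft sentence: revenue "grew from $2.0M to $3.0M, a 60% increase".
print(check_percent_claim(2.0, 3.0, 60))  # -> False: the actual change is 50%
```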

High-stakes content demands deeper verification. Obtain original source materials. Consult experts. Compare information with multiple authoritative sources. Fact-check all quotes, data points, and attributions.

Advanced Strategies for Organizations

Organizations using AI at scale need systematic approaches.

Retrieval-augmented generation (RAG) is one of the most effective techniques for reducing hallucinations. Think of RAG as giving AI access to a verified library before it writes, rather than relying only on its memory: the system first retrieves relevant passages from a trusted knowledge base, then generates its answer from them. This grounds answers in verified information, though the output still needs review, because the model can misread the retrieved text or go beyond it.
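The retrieve-then-generate flow can be sketched in a few lines of Python. This is a toy under stated assumptions: simple word overlap stands in for the embedding-based vector search that production RAG systems typically use, the library contents are invented, and the final call to a model is omitted because it depends on your provider. What matters is the shape: retrieve trusted passages, then instruct the model to answer only from them and to admit when they do not contain the answer.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, library: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Rank trusted passages by word overlap with the question (stand-in for vector search)."""
    q = tokenize(question)
    scored = sorted(library.items(),
                    key=lambda item: len(q & tokenize(item[1])),
                    reverse=True)
    # Keep the top k, dropping passages that share no words with the question.
    return [item for item in scored[:k] if q & tokenize(item[1])]


def grounded_prompt(question: str, library: dict[str, str]) -> str:
    """Build a prompt that restricts the model to the retrieved sources."""
    passages = retrieve(question, library)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If they do not contain the answer, reply 'Not found in sources.'\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```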

Transparent AI usage policies build trust. Develop guidelines that include:

- When to disclose AI assistance to audiences.

- Review processes for different content types.

- Documentation standards for fact-checking.

- Attribution requirements.

Define clear escalation procedures for handling potential hallucinations discovered after publication. This includes correction protocols, communication templates, response timelines, and documentation.
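Response timelines are easier to enforce when each reported problem is logged with a severity and a due time. Here is a minimal Python sketch; the severity names and response windows are placeholder assumptions for your own policy to replace, and communication templates are out of scope.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Placeholder response windows; set these in your own escalation policy.
RESPONSE_WINDOW = {
    "critical": timedelta(hours=4),  # e.g. a wrong medical or legal claim
    "major": timedelta(hours=24),    # e.g. a fabricated quote or statistic
    "minor": timedelta(days=3),      # e.g. a wrong date with no material impact
}


@dataclass
class Incident:
    article_id: str
    description: str
    severity: str
    reported_at: datetime

    def correction_due(self) -> datetime:
        """Deadline for publishing a correction under the policy above."""
        return self.reported_at + RESPONSE_WINDOW[self.severity]

    def is_overdue(self, now: datetime) -> bool:
        return now > self.correction_due()
```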

Industry-Specific Hallucination Risks

Hallucination risks aren't uniform. Your industry dictates the severity of errors and the required verification level.

- Legal & Medical: Even minor inaccuracies can lead to severe consequences. Expect the highest level of human expert review.

- Journalism & Research: Factual integrity is paramount for credibility. Rigorous source verification is a must.

- Marketing & General Information: While errors are less critical, misinformation still damages reputation. Medium-risk verification applies.

- Creative Writing: This field has higher tolerance for invented content, but logical consistency checks remain important.

Verification requires time and resources, and the costs add up: by one estimate, enterprises spend an average of $14,200 per employee per year correcting AI hallucinations. Organizations must therefore balance the level of scrutiny against the risk the content carries. Prioritize deep checks for high-stakes information and use quicker checks for lower-risk content to manage costs efficiently.

The field is constantly evolving. Emerging solutions aim to further reduce hallucinations, including constitutional AI (training models to adhere to a set of guiding principles), fact-checking integrations (linking AI to real-time verification databases), and confidence scoring systems (where the AI indicates its own certainty level).
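Confidence scoring is implemented differently across tools. One simple, model-agnostic technique in the same spirit is a self-consistency check: send the same question several times and measure how often the answers agree, routing low-agreement answers to human review. The Python sketch below assumes you have already collected the sampled answers; the samples shown are hypothetical. High agreement is not proof of accuracy, since a model can be consistently wrong.

```python
from collections import Counter


def agreement_score(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the fraction of samples that agree with it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    normalized = [a.strip().lower() for a in answers]
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)


# Hypothetical: the same question was sent to a model five times.
samples = ["Canberra", "canberra", "Sydney", "Canberra", "Melbourne"]
print(agreement_score(samples))  # -> ('canberra', 0.6)
```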

Quick-Start Implementation Plan

Implementing comprehensive hallucination prevention might seem overwhelming. Start with small steps.

Day 1: Essential prompt improvements

- Update all standard prompts to be more specific.

- Add explicit instructions for verification.

- Request source citations for all factual claims.

Week 1: Basic verification system

- Create verification checklists by content type.

- Assign verification responsibilities to team members.

- Implement cross-checking procedures for high-priority content.

Month 1: Comprehensive prevention framework

- Develop formal AI usage policies for your organization.

- Train team members on hallucination detection techniques.

- Integrate automated checking tools where appropriate.

- Establish review protocols based on content risk levels.

This phased approach balances immediate improvements with long-term system building.

Moving Forward Responsibly

AI hallucinations present significant challenges. As you use AI tools, remaining vigilant about verification becomes essential for maintaining credibility.

Remember these key points: Specific prompts guide AI away from errors. Tiered verification protects high-risk content. Human oversight remains crucial. RAG systems ground AI output in trusted sources.

The responsibility for accurate content rests with you, the publisher. Current generative AI models focus on generating plausible content rather than factual content. Verification is an ongoing necessity. While emerging technologies offer promising paths forward, complete elimination of hallucinations remains an aspirational goal.

Your best defense combines technological solutions and human oversight. By understanding the causes of hallucinations, developing systematic verification, and staying informed about advancements, you'll harness AI's benefits while minimizing its risks.

The future of AI content creation depends on this balance of innovation and responsibility. By adopting these strategies, you can use AI with confidence while keeping your content trustworthy. Start implementing these steps today to build a more reliable AI-driven content workflow.